Using a pre-populated Database with Testcontainers

June 22, 2020

This is the third post on a series of posts about Testcontainers. This post expands on the second post so it’s recommended that you read it before this one since we’re building on the application that was created on previous posts.

The Problem

Let’s suppose you have a database that’s very big. That requires a huge amount of data and it takes a lot of time to prepare it before each test. To maintain consistency over tests you could running keep all this setup before each test class, however it can slow your tests dramatically.

Other option would be to not dispose of the database container after each test class. This way the setup would be done only once for all the tests. This approach however, comes with a great drawback, you have to manage the state of the database so that your tests remain consistent.

A third option, which I’ll explore here is to have all the setup data already created inside the docker image and then you can quickly spin up this clean state before each test class without compromising speed.

So, let’s take the simple add_users.sql:

CREATE TABLE users(

id INT,

first_name VARCHAR,

last_name VARCHAR

);

INSERT INTO users (id, first_name, last_name) VALUES (2, 'Addrianne', 'Lenker'), (3, 'Phoebe', 'Bridges');And try to build an image with this data already created inside it.

Using Custom Entrypoints

The default PostgreSQL image has something similar to initEntrypoint of Testcontainers. During startup it

will scan .sql and .sh files on /docker.entrypoint.d and it will run those files during the container

startup. The trick here is to find a way to run this entrypoint during the build part of the container.

However that’s not exactly what we want here. We want to be able to run those .sql files at the build

phase of the container. Searching online, this issue here provides some clues to achieve what we

want. It describes a way to check if a database already exists when we’re building our container, and if there’s

not one we can start the database server and insert data on it.

That sounds promising, so we’re going to extend the postgres docker image for that and add this custom entrypoint which will create our data.

create a database folder on the root of your project. Create the file custom-entrypoint.sh containing

the following code which was extracted from the issue mentioned above:

#!/usr/bin/env bash

set -Eeo pipefail

# Example using the functions of the postgres entrypoint to customize startup to always run files in /always-initdb.d/

source "$(which docker-entrypoint.sh)"

docker_setup_env

docker_create_db_directories

# assumption: we are already running as the owner of PGDATA

# This is needed if the container is started as `root`

#if [ "$1" = 'postgres' ] && [ "$(id -u)" = '0' ]; then

# exec gosu postgres "$BASH_SOURCE" "$@"

#fi

if [ -z "$DATABASE_ALREADY_EXISTS" ]; then

docker_verify_minimum_env

docker_init_database_dir

pg_setup_hba_conf

# only required for '--auth[-local]=md5' on POSTGRES_INITDB_ARGS

export PGPASSWORD="${PGPASSWORD:-$POSTGRES_PASSWORD}"

docker_temp_server_start "$@" -c max_locks_per_transaction=256

docker_setup_db

docker_process_init_files /docker-entrypoint-initdb.d/*

docker_temp_server_stop

else

docker_temp_server_start "$@"

docker_process_init_files /always-initdb.d/*

docker_temp_server_stop

fi

exec postgres "$@"Then, also inside the database folder, let’s separate add_users.sql in two files as it’s more

sense to separate the creation of the table and the insertion of test data. Create a file named

01_create_tables.sql containing:

CREATE TABLE users(

id INT,

first_name VARCHAR,

last_name VARCHAR

);and another file named 02_add_test_users.sql containing:

INSERT INTO users (id, first_name, last_name) VALUES (2, 'Addrianne', 'Lenker'), (3, 'Phoebe', 'Bridges');The naming is important here because the files are read on alphabetic order. Now to bring everything together

create a Dockerfile with the following:

FROM postgres:12.3-alpine

ENV POSTGRES_USER=postgres

ENV POSTGRES_PASSWORD=postgres

ENV POSTGRES_DB=test

COPY ./custom-entrypoint.sh /usr/local/bin/custom-entrypoint.sh

COPY ./01_create_tables.sql /docker-entrypoint-initdb.d/

COPY ./02_add_test_users.sql /docker-entrypoint-initdb.d/

RUN chmod +x /usr/local/bin/custom-entrypoint.sh

USER postgres

RUN custom-entrypoint.sh postgres || exit 0

ENTRYPOINT [ "custom-entrypoint.sh" ]

# using this CMD works so that docker run ... doesnt require a command override,

# only downside is it calls SET for this parameter on every psql issued to the container

CMD [ "-c", "max_locks_per_transaction=512" ]The Dockerfile first sets the credentials that we’re going to use on our tests. Then it copies our

script to the bin folder of the container and our .sql scripts to the /docker-entrypoint-initdb.d

folder.

Then it runs our custom entrypoint, which will run those scripts if a database is not already created, which on build time it is not (unless you mount a volume with the data, but that’s not the case here).

Finally, build the image so we can use on our code by running docker build -t demo/testdb .

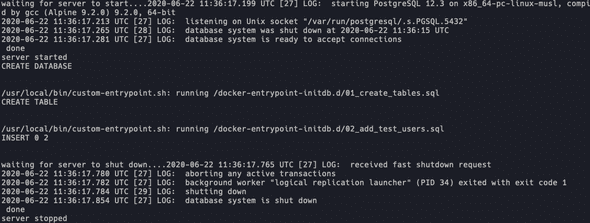

You should see on the logs the sql code being executed like this:

Updating tests to use the new image

Since we’re using a custom image, we need to specify that on the code. Edit DatabaseTest.kt and replace

the line

class KPostgresContainer: PostgreSQLContainer<KPostgresContainer>()with

class KPostgresContainer: GenericContainer<KPostgresContainer>("demo/testdb:latest")We also don’t need to specify the username and password since we’re already doing that on the Dockefile. So change the container initialization to just:

@Container

val container = KPostgresContainer()

.withExposedPorts(5432)That’s it for the code changes. If you run your tests now they should pass and the sql scripts are run

only once on the creation of the Dockerfile itself, that can save a lot of time if have a lot of scripts

to run. You can check the code for this post here. Just clone and checkout the custom-entrypoint tag.

Hope you enjoyed these series of posts! Testcontainers is a very handy tool.